We know that correlation does not necessarily mean causation. But sometimes you gotta wonder, especially as Mr. Trump’s administration is busily dismantling the CIA. First, GW Bush ignored the PDB of August 6, 2001, on “Bin Laden determined to strike in US”. Then Trump 1.0 eliminated the NSC directorate on pandemic preparedness before the Covid-19 pandemic (and apparently, with the exception of Peter Navarro(!), ignored warnings about Covid).

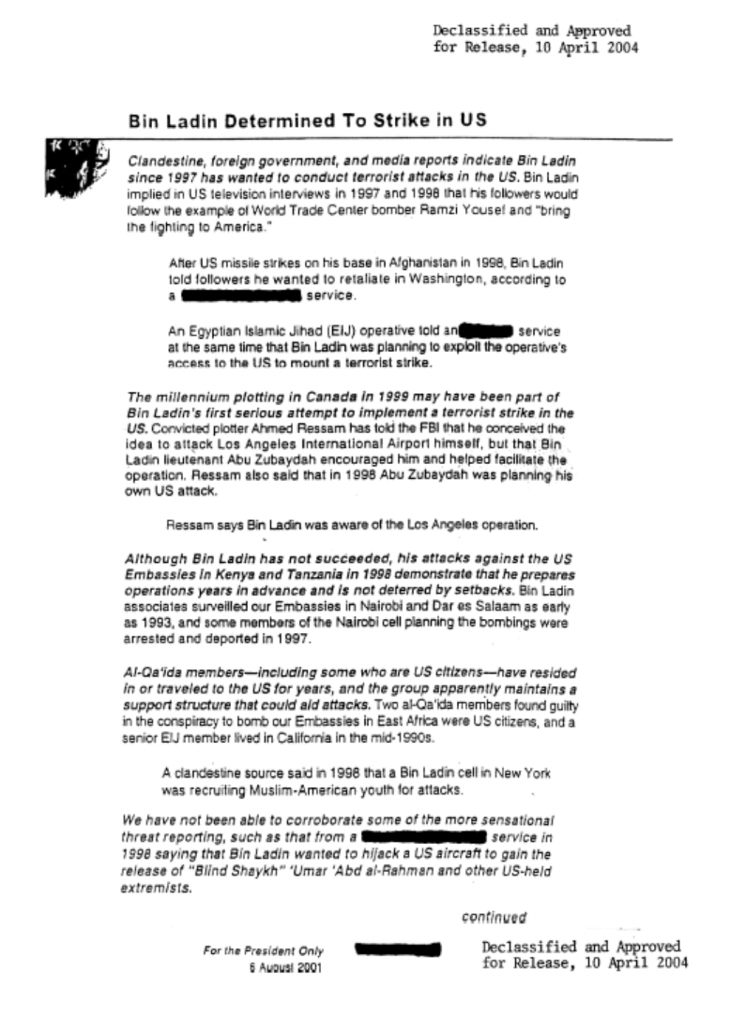

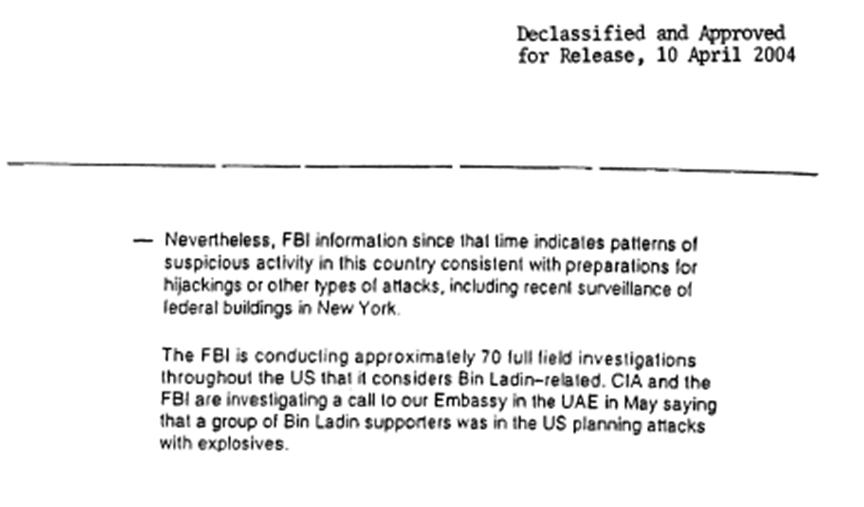

The PDF of the entire memo (redacted of course), delivered 36 days before 9/11, is here.

Now, Mr. Trump’s administration is going full throttle to eliminate (once again) early warning systems against threats to the United States. What’s coming next?

Populism elevates personal intuition above fact. Experts (the real ones) traffic in facts, and populists are mostly anti-expertise. Maybe you want your surgeon to be an expert, and your plumber, but scientists, teachers, policy makers, bureaucrats – what do they know?

Bush didn’t “debate with myself”, which was his dismissive way of saying he didn’t need to think before deciding. War and tax cuts, deregulation and bullying were all that mattered. That said, even Bush was more sensitive to unintended consequences than Trump, though punishing differences of opinion was a big part of the Bush administration, just like it is for the felon-in-chief.

Off topic – Risks of the AI-ification of finance:

Inevitably, advances in information technology are adopted as a way to speed up – excuse me, “to improve efficiency in” – financial transactions; AI is here and is going to cause financial turbulence. Jon Danielsson, Director of the LSE Systemic Risk Centre, is on the case:

https://cepr.org/voxeu/columns/artificial-intelligence-and-stability

Here’s his introductory paragraph:

“Private-sector financial institutions are rapidly adopting artificial intelligence (AI), motivated by promises of significant efficiency improvements. While these developments are broadly positive, AI also poses threats – which are poorly understood – to the stability of the financial system.”

I’d love to know how Danielsson arrived at “these developments are broadly positive”, but let’s put that “what’s good for finance is always good” shibboleth aside. Danielsson sees the speed and black-box nature of AI-driven financial activity as a source of risk. He’s in charge of studying systemic financial risk at the LSE, so maybe he’s on to something. After all, it’s pretty much axiomatic that financial innovation leads to crisis.

Danielsson recommends that governments set up AI-driven emergency liquidity facilities to deal with AI-generated bad things. Y’all remember the whole “what about moral hazard” objection to financial bailouts? Seems like we’ve given up on that.

Danielsson has essentially recommended that human judgement be set aside, so that any time an AI-based money-making scheme runs amok, government will lend money to the scheme and so to its backers and counterparties. Never question, just lend. Go ahead and AI the bejesus out of this thing – we’ll back you up.

The problem Danielsson has identified is that split-second financial transactions with no human oversight and no human insight into the transaction create systemic financial risk. His solution is split-second bailouts with no human oversight and no human insight into the transaction.

I’m willing to believe that AI is a problem. I’m not so sure Danielsson has the solution.

I’m not sure what “AI” means in this context, other than “better pattern recognition that has a greater chance of overfitting the training data and going seriously wrong”, but today’s trading algorithms certainly fit the “split-second financial transactions with no human oversight and no human insight…” definition as is.

On another note, I’ve been getting 403 (permission denied) errors for a long time when I try to post on this site, and I suspect, from their disappearance, that pgl has been getting them too. This post comes from a different computer, same Name and Email, so we will see if it goes through…

The troglodyte in me says “clear cookies”. Or maybe “clear cache”. Anyhow, I routinely clear both whenever I’ve been on the web, and have had only a few run-ins with 403.

It’s lonely in here.

I pointed out the HTTP 403 error problem to Dr. Chinn last week. It is a configuration error on the server that keeps people from posting to the site, some sort of file access configuration issue. He will have to get someone from IT to look into it. It comes and goes, so it might be a bit difficult to suss out. Some people might be completely locked out.

If we had competent governance, leaders who attended to actual needs and instructed the government bureaucracy to do likewise, we would not be reading this blog post. Government has bigger fish to fry than who’s loyal and who’s woke.

Here’s a for instance – what have we heard from the felon-in-chief about his plans to deal with property insurance in light of climate change? What is the plan for gradually nudging population away from areas which must eventually be abandoned, thereby preserving resources and reducing human hardship?

In case anyone is tempted to claim “we don’t know enough to deal with that”* there is abundant evidence that we do. Here’s just one example of the knowledge available to us:

https://www.businessinsider.com/climate-change-lower-home-prices-property-values-places-hit-hardest-2025-2#5-kern-county-california-1

There are lots more examples. Why are taking over Greenland and Gaza and undermining support for science and for our allies and our intelligence agencies the priorities of our felon-in-chief? Perhaps because he has no clue what to do about our real problems. Brucie?

*Some of the usual suspects might still try to argue that climate change isn’t a thing, but we shouldn’t let them distract us.